Artificial intelligence describes computer programs that can carry out sophisticated operations previously limited to human performance, such as problem-solving, thinking, and decision-making.

These days, “AI” refers to a broad spectrum of technologies that underpin many of the products and services we use daily, ranging from chatbots that offer real-time customer care to apps that suggest TV series. However, do any of these genuinely represent artificial intelligence in the sense that most of us understand it? If not, what makes us use the term so frequently?

In this guide, you will learn more about artificial intelligence, its various types, and how it works. You will also find some of the advantages and risks associated with AI and explore flexible course options to help you deepen your understanding of the field.

What is artificial intelligence?

Artificial intelligence (AI) is the technology that enables machines and computer devices to act like humans in problem solving, decision-making, and creativity.

AI-integrated apps and devices can see and identify objects. They can speak, understand, and answer in human language. They can learn from new experience and information. They can give comprehensive explanations to users and experts.

Self-driving cars are a classic example of a technology that can act on its own, without help or intervention from a human being.

But in 2024, most AI researchers, professionals, and headlines are focused on progress in generative AI (gen AI), a technology that can generate new text, images, videos, and other types of content.

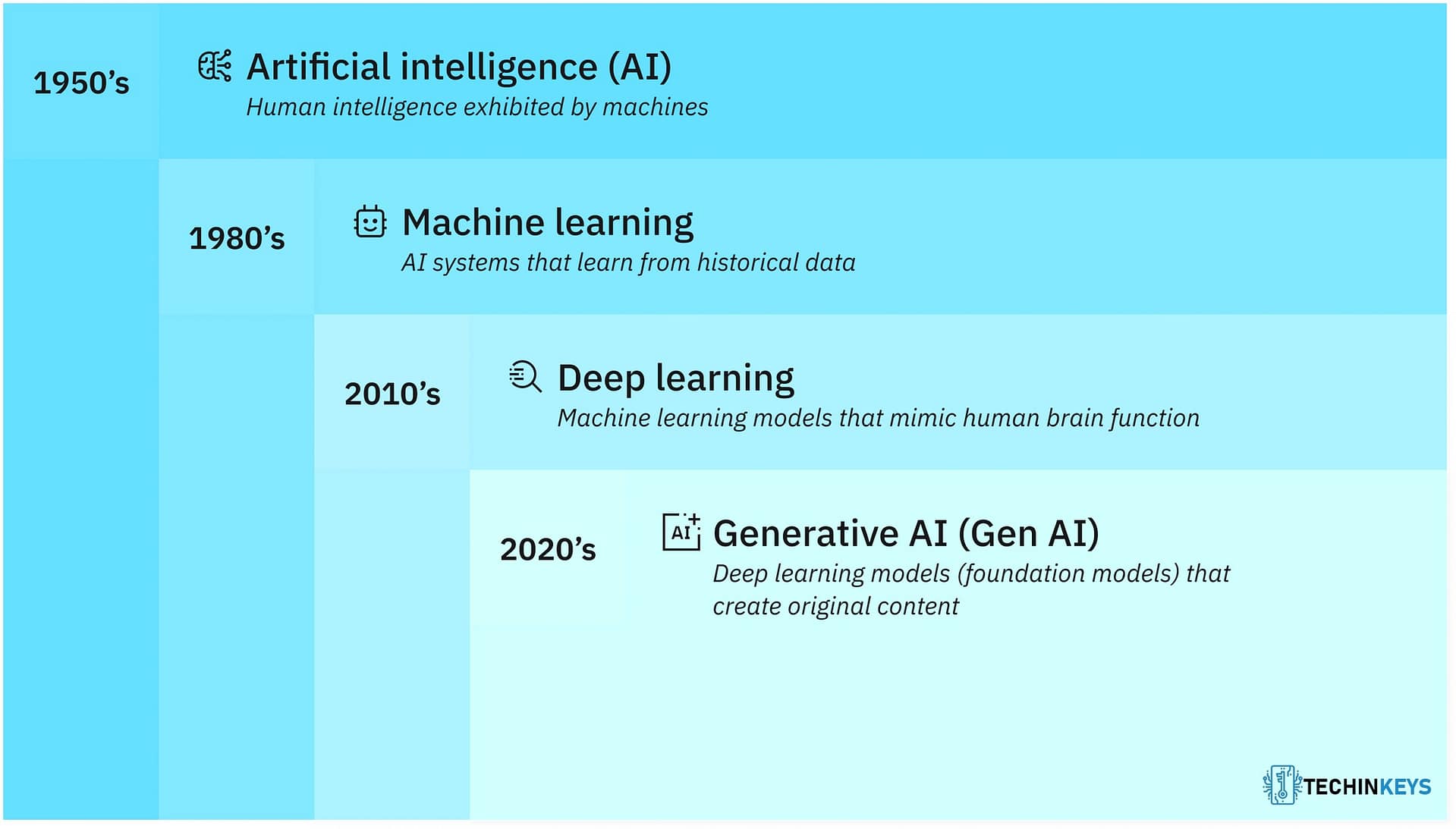

To fully understand generative AI, you first need to know about the two technologies it is built on: machine learning (ML) and deep learning.

Machine Learning

An easy way to think about AI is as a set of nested ideas that have grown and changed over more than 70 years, with each idea sitting inside the one before it.

Machine learning sits directly inside AI. It builds models by training an algorithm on data so that it can make predictions or decisions. It covers many methods that let machines learn from data and draw conclusions from it without being explicitly programmed for a specific task.

Machine learning has a lot of different methods and algorithms, such as decision trees, random forests, support vector machines (SVMs), k-nearest neighbor (KNN), clustering, and more. Every one of these methods works best with certain types of data and situations.

However, a neural network (or artificial neural network) is one of the most well-known types of machine learning methods. The structure and operation of neural networks are based on those of the human brain. Neural networks are made up of layers of nodes that are connected to each other, like neurons. These nodes work together to process and analyze large amounts of data. Neural networks are well suited to finding complex patterns and relationships in large amounts of data.

Supervised learning is the simplest type of machine learning. It uses labeled data sets to train algorithms to classify data or predict outcomes accurately. In supervised learning, people pair each training example with an output label. The model has to learn the mapping between inputs and outputs in the training data so it can predict the labels of new data it hasn’t seen yet.
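To make the idea of learning from labeled examples concrete, here is a minimal sketch of a k-nearest neighbor classifier, one of the methods named earlier. The animal measurements, the labels, and the `predict` function are all invented for illustration; real projects would normally use a machine learning library and far more data.

```python
from collections import Counter
import math

# Hypothetical labeled training set: (height_cm, weight_kg) paired with a label.
TRAINING_DATA = [
    ((20.0, 4.0), "cat"),
    ((25.0, 5.0), "cat"),
    ((23.0, 4.5), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
    ((65.0, 35.0), "dog"),
]


def predict(features, training_data=TRAINING_DATA, k=3):
    """Return the majority label among the k training examples closest to `features`."""
    by_distance = sorted(
        training_data,
        key=lambda example: math.dist(example[0], features),
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# New, unseen inputs: the model guesses their labels from the labeled examples.
small_animal = predict((22.0, 4.2))   # closest examples are all labeled "cat"
large_animal = predict((58.0, 28.0))  # closest examples are all labeled "dog"
```

The "training" here is nothing more than storing the labeled pairs, which is why k-nearest neighbor is a convenient first example. Other supervised methods fit explicit parameters instead.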

Deep learning

Deep learning is a branch of machine learning that uses deep neural networks, which are multilayered neural networks that work more like the way the human brain makes decisions.

Deep neural networks have an input layer, at least three hidden layers (but typically hundreds of them), and an output layer. This is different from traditional machine learning neural networks, which only have one or two hidden layers.
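As a rough sketch of that layered structure, the snippet below pushes one input through an input layer, three hidden layers, and an output layer. The layer sizes, the random weights, and the `tanh` activation are assumptions chosen for illustration, and the network is untrained, so its outputs carry no meaning.

```python
import math
import random


def make_layer(n_inputs, n_outputs, rng):
    """Create one fully connected layer with small random weights and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def forward(layers, inputs):
    """Pass `inputs` through every layer in turn, applying tanh after each one."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


rng = random.Random(0)
# Hypothetical sizes: 4 inputs, three hidden layers of 8 nodes each, 2 outputs.
sizes = [4, 8, 8, 8, 2]
network = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

outputs = forward(network, [0.5, -0.2, 0.1, 0.9])
```

Training would adjust the weights and biases so that the outputs become useful. That step is omitted here to keep the structure visible.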

These many layers make it possible for unsupervised learning to happen. They can automatically pull out features from big, unlabeled, and unstructured data sets and guess what the data means on their own.

Because this feature extraction needs little human intervention, deep learning makes machine learning possible on a huge scale.

It works well for computer vision, natural language processing (NLP), and other jobs that need to quickly and accurately find complex patterns and relationships in a lot of data.

Most of the artificial intelligence (AI) apps we use today are powered by some kind of deep learning.

Generative AI

Generative AI, also known as “gen AI,” is a term for deep learning models that can make complex, unique content, like long-form text, high-quality images, realistic video or audio, and more, when a user asks them to.

At a high level, generative models encode a simplified representation of their training data. They then draw on that representation to make new work that is similar to the original data but not exactly the same.

In statistics, generative models have been used for a long time to analyze numerical data. But in the past ten years, they have evolved so that they can analyze and generate more complicated types of data.

At the same time as this change, three advanced types of deep learning models appeared:

- Variational autoencoders, or VAEs, were introduced in 2013. They made it possible for models to generate multiple variations of content in response to a prompt or instruction.

- Diffusion models, which were first seen in 2015, add “noise” to pictures until they can’t be recognized. They then remove the noise to make new images in response to prompts.

- Transformers (also referred to as transformer models) are trained on sequential data to generate extended sequences of content, like words in sentences, shapes in pictures, image frames, or software code commands. Many of the big-name generative AI tools of today, like ChatGPT and GPT-4, Copilot, BERT, Bard, and Midjourney, are built on transformers.
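The noise-adding half of the diffusion idea in the list above can be sketched in a few lines. The update rule used here (keep `sqrt(1 - beta)` of each pixel and add `sqrt(beta)` of Gaussian noise) is a common choice in the diffusion literature and is an assumption, not something this guide specifies. The eight-value "image", the step count, and the function names are hypothetical. The noise-removal half needs a trained neural network and is not shown.

```python
import math
import random


def add_noise_step(pixels, beta, rng):
    """Blend each pixel with Gaussian noise; `beta` is the share of noise variance."""
    keep = math.sqrt(1.0 - beta)
    noise_scale = math.sqrt(beta)
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


def noise_schedule(pixels, steps, beta, seed=0):
    """Return the original pixels followed by each progressively noisier version."""
    rng = random.Random(seed)
    history = [list(pixels)]
    for _ in range(steps):
        history.append(add_noise_step(history[-1], beta, rng))
    return history


# A hypothetical one-dimensional "image" of eight pixel intensities.
image = [1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0]
history = noise_schedule(image, steps=50, beta=0.1)
```

After enough steps the original pattern is almost entirely replaced by noise. A diffusion model is trained to run this process in reverse, one small step at a time.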

Artificial Intelligence Examples

Even though the humanoid robots frequently linked with artificial intelligence (think Data from Star Trek: The Next Generation or the T-800 from Terminator 2) do not yet exist, you have probably already used services or products that rely on machine learning.

In its most basic form, machine learning uses algorithms trained on data sets to build models that enable computer systems to carry out operations like translating text between languages, suggesting songs, and figuring out the quickest route to a destination. Among the most prevalent applications of AI in use now are:

- ChatGPT: Uses large language models (LLMs) to generate text in response to questions or comments.

- Google Translate: Uses deep learning algorithms to translate text from one language to another.

- Netflix: Uses machine learning algorithms to build personalized recommendation engines for users based on their previous viewing history.

- Tesla: Uses computer vision to power self-driving features in its cars.

Generative AI is becoming more and more accessible, making it a desirable skill for many IT positions. Google’s Introduction to Generative AI is a free, beginner-friendly online course that you might want to consider if you want to learn how to use AI in your business.

AI in the workforce

Artificial intelligence is widely used across many different industries. Automating tasks that don’t require human intervention saves money and time and can reduce the risk of human error. Here are a few examples of how AI might be used in various industries:

- Finance industry. Fraud detection is a notable use case for AI in the finance industry. AI’s capability to analyze large amounts of data enables it to detect anomalies or patterns that signal fraudulent behavior.

- Health care industry. AI-powered robotics could support surgeries close to highly delicate organs or tissue to mitigate blood loss or the risk of infection.

What is artificial general intelligence (AGI)?

Artificial general intelligence, or AGI, is a hypothetical state in which computer systems equal or surpass human intelligence. To put it another way, AGI is “true” artificial intelligence, the kind portrayed in countless science fiction books, comic books, television series, and films.

As with the definition of “AI” itself, experts are divided on how to identify “true” artificial general intelligence when it appears. The Turing Test, also known as the imitation game, is the most well-known method for determining whether a machine is intelligent. Renowned mathematician, computer scientist, and cryptanalyst Alan Turing originally described the experiment in a 1950 paper on computer intelligence. In it, Turing explained a three-player game in which a human “interrogator” is required to communicate by text with another human and a machine and judge which is which. If the interrogator cannot reliably tell them apart, the machine is said to have passed the test.

To complicate matters, scientists and philosophers are divided on whether artificial general intelligence (AGI) is getting closer to reality or is still far off. For instance, a recent Microsoft Research and OpenAI study contends that GPT-4 is an early example of artificial general intelligence (AGI). However, many other experts doubt these assertions and claim they were produced purely for media attention [2, 3].

Regardless of how far we are from achieving AGI, you can assume that when someone uses the phrase artificial general intelligence, they are referring to the kind of conscious computer programs and machines frequently encountered in popular science fiction.

The 4 Types of AI

Researchers need to start developing more sophisticated definitions of intelligence and perhaps consciousness as they work to create increasingly sophisticated artificial intelligence systems. Researchers have identified four different forms of artificial intelligence to shed light on these ideas.

1. Reactive machines

The most fundamental form of artificial intelligence is seen in reactive machines. These machines only “react” to what is in front of them at any time; they are unaware of past events. As a result, they cannot complete tasks outside of their constrained context and can only execute some sophisticated functions within a very tight scope, such as playing chess.

2. Limited memory machines

Machines with limited memory have a limited comprehension of the past. Compared to reactive machines, they are more capable of interacting with their surroundings. For instance, self-driving cars use limited memory to detect and react to approaching vehicles, change course, and adjust speed. However, machines with limited memory cannot build a thorough picture of the world because their recollection of past events is restricted and only used within a narrow window of time.
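The difference between the first two types can be sketched with a toy driving controller. Everything here is hypothetical: the distance readings, the 10-meter braking threshold, the three-reading window, and the class and function names are chosen only to show the contrast, and real driving systems are far more complex.

```python
from collections import deque


def reactive_controller(distance_m):
    """Reactive: decide from the current reading only, with no memory of the past."""
    return "brake" if distance_m < 10.0 else "cruise"


class LimitedMemoryController:
    """Limited memory: keep only the last few readings and act on their trend."""

    def __init__(self, window=3):
        # Older readings fall out of the deque automatically.
        self.readings = deque(maxlen=window)

    def decide(self, distance_m):
        self.readings.append(distance_m)
        if distance_m < 10.0:
            return "brake"
        # If the gap has shrunk at every step across a full window, slow down early.
        values = list(self.readings)
        closing = len(values) == self.readings.maxlen and all(
            later < earlier for earlier, later in zip(values, values[1:])
        )
        return "slow" if closing else "cruise"


# Distance in meters to an approaching vehicle, one reading per time step.
readings = [40.0, 30.0, 20.0, 12.0]
controller = LimitedMemoryController(window=3)

reactive_decisions = [reactive_controller(d) for d in readings]
memory_decisions = [controller.decide(d) for d in readings]
```

With these readings the reactive controller keeps cruising because no single reading is below the threshold, while the limited memory controller starts slowing at the third reading because it can see the gap closing.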

3. Theory of mind machines

“Theory of mind” machines would represent an early form of artificial general intelligence. These machines would be able to comprehend other living things and form representations of them. This reality has yet to come to pass.

4. Self-aware machines

The most advanced form of artificial intelligence, in theory, is machines that are aware of themselves and the world around them. When most people discuss reaching AGI, they mean something like this. For now, this remains a far-off reality.

The Benefits of AI

AI offers numerous advantages that are transforming the way we work and live. Here are the top benefits:

Automation of Repetitive Tasks

AI excels at automating mundane and repetitive tasks, freeing up human workers to focus on higher-value activities. For instance:

- Data entry and processing tasks can be handled by AI-powered tools.

- AI systems streamline workflows in industries like manufacturing and logistics, reducing costs and improving efficiency.

Enhanced Decision-Making

AI analyzes vast amounts of data at lightning speed, enabling better and faster decision-making. By identifying patterns and trends, AI helps organizations make data-driven choices. Examples include:

- Predictive analytics in finance to guide investment strategies.

- AI-powered diagnostic tools in healthcare that assist in early disease detection.

Fewer Human Errors

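Unlike humans, AI systems don’t suffer from fatigue and apply the same rules every time, which reduces the likelihood of errors in routine work, although they can still inherit biases from their training data. This is particularly valuable in: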

- Financial transactions, where precision is critical.

- Manufacturing, where consistent quality control is essential.

Round-the-Clock Availability and Consistency

AI systems operate 24/7 without breaks, ensuring consistent performance. This benefit is particularly evident in:

- Customer support chatbots that provide instant responses.

- Automated monitoring systems in cybersecurity that detect threats in real time.

Reduced Physical Risk

AI can perform tasks that are hazardous for humans, minimizing physical risks. For example:

- Drones equipped with AI inspect infrastructure in dangerous locations.

- AI-powered robots handle toxic substances in industrial settings.

AI Use Cases Across Industries

The versatility of AI has led to its adoption in numerous industries. Here are some prominent use cases:

Customer Experience, Service, and Support

AI enhances customer interactions by providing personalized, efficient, and timely support. Examples include:

- Chatbots that resolve customer queries instantly.

- AI-driven recommendation engines that suggest products based on user behavior.
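A recommendation engine based on user behavior can be sketched, in a very reduced form, as "find the most similar user and suggest what they watched". The viewing histories, the titles, and the use of Jaccard similarity are assumptions for illustration. Production systems use much richer signals and learned models.

```python
def jaccard(a, b):
    """Overlap between two sets: size of the intersection over size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, history):
    """Suggest titles watched by the most similar other user but not yet by `user`."""
    watched = history[user]
    others = [name for name in history if name != user]
    most_similar = max(others, key=lambda name: jaccard(watched, history[name]))
    return sorted(history[most_similar] - watched)


# Hypothetical viewing histories keyed by user name.
history = {
    "ana": {"Space Saga", "Robot Wars"},
    "ben": {"Space Saga", "Robot Wars", "Star Pilots"},
    "cam": {"Baking Duel", "Garden Tales"},
}

suggestions = recommend("ana", history)
```

Here "ben" shares two titles with "ana" and "cam" shares none, so the suggestion for "ana" is the one title "ben" has watched that she has not.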

Fraud Detection

AI’s ability to analyze large datasets and detect anomalies makes it invaluable for fraud prevention. Use cases include:

- Monitoring financial transactions for suspicious activities.

- Identifying fake reviews or accounts on online platforms.
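As a simple stand-in for the anomaly detection described above, the sketch below flags transaction amounts that sit far from the typical value. It uses a modified z-score based on the median, with the conventional constant 0.6745 and cutoff 3.5. Those choices, the sample amounts, and the `flag_anomalies` name are assumptions. Real fraud systems typically learn from many features rather than a single amount.

```python
import statistics


def flag_anomalies(amounts, threshold=3.5):
    """Return the amounts whose modified z-score exceeds `threshold`."""
    median = statistics.median(amounts)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = statistics.median([abs(a - median) for a in amounts])
    if mad == 0:
        return []  # All values are (nearly) identical, so nothing stands out.
    return [a for a in amounts if 0.6745 * abs(a - median) / mad > threshold]


# Hypothetical card transactions in dollars; one is far larger than the rest.
transactions = [25.0, 40.0, 31.5, 28.0, 35.0, 22.0, 30.0, 950.0]
suspicious = flag_anomalies(transactions)
```

The median is used instead of the mean because a single very large transaction would pull the mean and standard deviation toward itself and could hide the anomaly in a small sample.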

Personalized Marketing

AI enables businesses to deliver targeted marketing campaigns by analyzing customer preferences and behaviors. For instance:

- Dynamic pricing strategies that adjust based on demand and user profiles.

- Tailored email campaigns driven by AI-powered segmentation.

Human Resources and Recruitment

AI streamlines recruitment processes and enhances employee engagement. Key applications include:

- Resume screening tools that identify the best candidates.

- AI-powered platforms that provide personalized training recommendations.

Application Development and Modernization

AI accelerates software development and modernization by automating code generation and testing. Examples include:

- AI tools that identify and fix bugs in real time.

- Platforms that optimize legacy systems for better performance.

Predictive Maintenance

AI’s predictive capabilities reduce downtime and maintenance costs in industries like manufacturing and transportation. Applications include:

- Monitoring machinery to predict failures before they occur.

- Analyzing vehicle data to schedule timely maintenance.

Weak AI vs. Strong AI

Understanding the distinction between weak AI and strong AI is essential for grasping the current state and future potential of artificial intelligence.

Weak AI

Also known as narrow AI, weak AI is designed to perform specific tasks and operates within predefined parameters. Examples include:

- Virtual assistants like Siri and Alexa.

- Recommendation systems on streaming platforms.

Strong AI

Strong AI, or artificial general intelligence (AGI), aims to replicate human cognitive abilities and perform any intellectual task. While it remains theoretical, strong AI could:

- Understand and learn from any task it encounters.

- Adapt to new situations without human intervention.

Challenges and Risks of AI

Despite its benefits, AI presents several challenges and risks that must be addressed to ensure responsible adoption.

1. Data Risks

AI systems rely heavily on data, making them vulnerable to:

- Data breaches and leaks, which compromise sensitive information.

- Biases in training data, leading to unfair or inaccurate outcomes.

2. Model Risks

AI models can be unpredictable and opaque, posing challenges such as:

- Lack of explainability, making it difficult to understand how decisions are made.

- Overfitting, where models perform well on training data but fail in real-world scenarios.
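Overfitting can be shown with a toy comparison between a model that memorizes its training data and a simpler rule. The data, the two deliberately wrong training labels, and the hand-picked cutoff of 5 are all invented for illustration.

```python
def nearest_neighbor_predict(train, x):
    """Memorizing model: copy the label of the closest training point."""
    _, label = min(train, key=lambda example: abs(example[0] - x))
    return label


def threshold_predict(x, cutoff=5.0):
    """Simple model: a single cutoff (chosen by hand here, which is an assumption)."""
    return 1 if x >= cutoff else 0


def accuracy(predict, data):
    """Fraction of (x, label) pairs that `predict` labels correctly."""
    return sum(predict(x) == label for x, label in data) / len(data)


# Hypothetical data: the true rule is "label 1 when x >= 5",
# but two training labels (at x=4 and x=8) are wrong on purpose.
train = [(1, 0), (2, 0), (3, 0), (4, 1), (6, 1), (7, 1), (8, 0), (9, 1)]
held_out = [(1.4, 0), (2.6, 0), (3.8, 0), (4.4, 0), (5.5, 1), (6.4, 1), (7.8, 1), (8.6, 1)]


def memorizer(x):
    return nearest_neighbor_predict(train, x)


memorizer_scores = (accuracy(memorizer, train), accuracy(memorizer, held_out))
threshold_scores = (accuracy(threshold_predict, train), accuracy(threshold_predict, held_out))
```

The memorizing model scores 1.0 on the training data but only 0.625 on the held-out data, because it has learned the two mislabeled points along with the real pattern. The simple cutoff scores 0.75 on the training data and 1.0 on the held-out data. Comparing training and held-out accuracy in this way is a common check for overfitting.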

3. Operational Risks

Implementing AI can disrupt existing workflows and systems. Common issues include:

- Integration challenges with legacy systems.

- Dependence on AI systems that may fail or malfunction.

4. Ethics and Legal Risks

The ethical and legal implications of AI adoption are significant. Key concerns include:

- Privacy violations due to intrusive data collection.

- Accountability issues when AI systems cause harm or make biased decisions.

Addressing AI Challenges

To mitigate these risks, organizations must adopt best practices such as:

- Ensuring data security and privacy through robust encryption and compliance with regulations.

- Using diverse and representative datasets to minimize biases.

- Implementing explainable AI (XAI) techniques to improve transparency.

- Establishing clear accountability frameworks for AI decision-making.

The Bottom Line

Artificial intelligence holds immense potential to transform industries and improve lives. By automating repetitive tasks, enhancing decision-making, and minimizing risks, AI empowers businesses to achieve greater efficiency and innovation. However, addressing the challenges and risks associated with AI is essential for its responsible and sustainable adoption.